This article explains what a GAM is, why and when to use one, and how to use it in practice.

What is GAM

Generalized additive models (GAMs) were introduced by Trevor Hastie and Robert Tibshirani in 1986.

First, let's break the name GAM into its parts.

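G stands for generalized: as in a generalized linear model (GLM), the dependent variable ($Y$) may follow a non-normal distribution, and its expected value is connected to the independent variables ($X$) through a link function, so the relationship between $Y$ and $X$ need not be linear.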

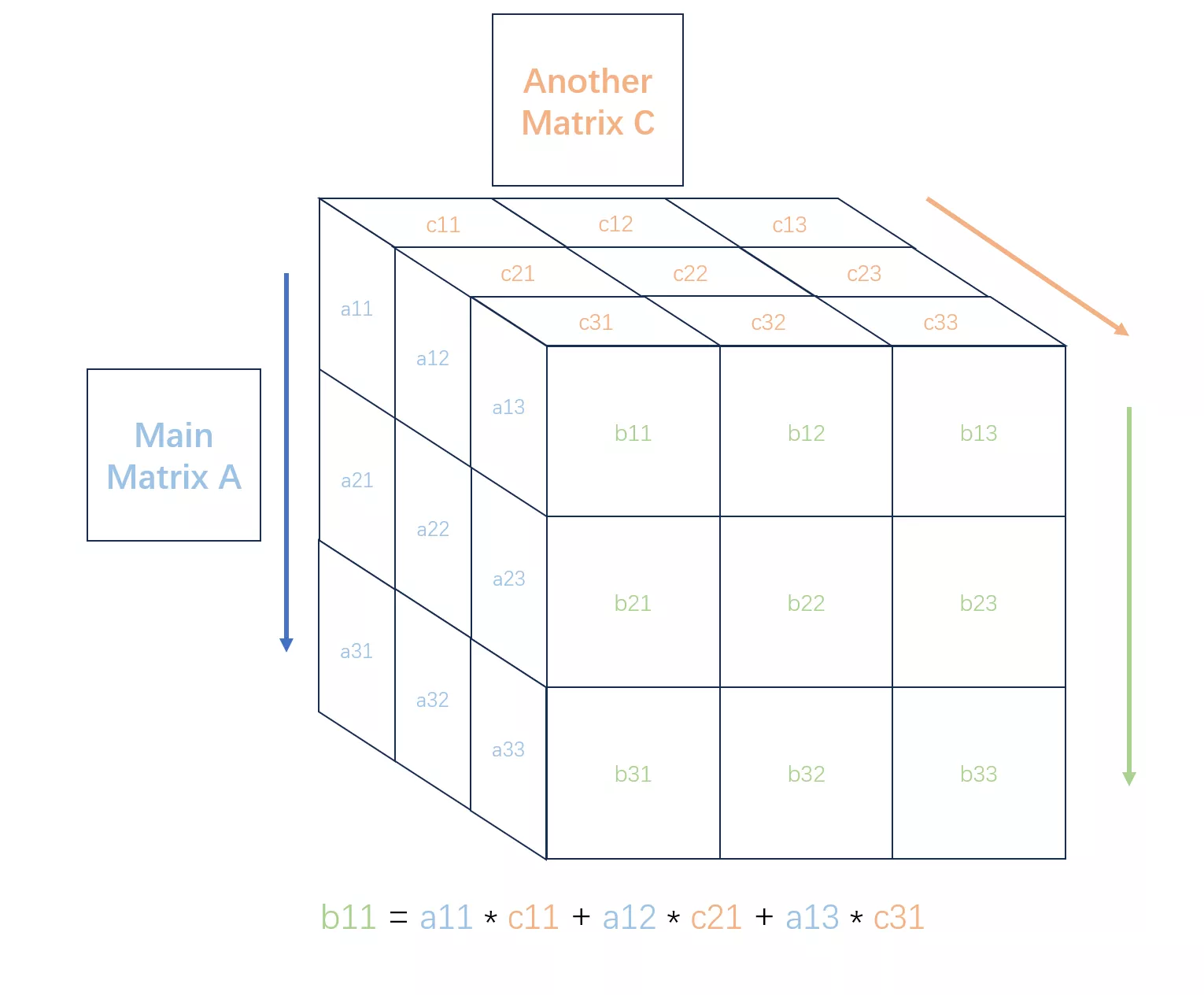

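A stands for additive: the transformed independent variables $f_i(X_i)$ are added together. Compared with a generalized linear model, which requires every term to be linear, $f_i(X_i)=k_i X_i$, a GAM allows each $f_i$ to be a smooth non-linear function, so it covers a larger class of models.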

Now let's look at the basic form of a GAM:

$$ g(E[Y])=\sum{f_i(X_i)} $$

Here $Y$ is the dependent variable. We model its expectation rather than $Y$ itself because, with limited knowledge of the independent variables and a simple model, it is impossible to predict $Y$ exactly. There will always be errors, so we treat $Y$ as following some probability distribution. In linear regression, we assume a normal distribution; in insurance, we typically assume a Poisson or Tweedie distribution.

$g$ is called the link function (this is the "generalized" part): it links the expectation of $Y$ to the sum of the transformed independent variables. When we choose the identity link $g(x)=x$, i.e. $E[Y]=\sum{f_i(X_i)}$, the model reduces to an additive model. I find it more intuitive to write $E[Y]=g^{-1}(\sum{f_i(X_i)})$. Here you can think of $g^{-1}$ as the activation function in a neural network. This view also shows the relationship between GAMs and neural networks: a GAM is like a one-layer network with an activation function, whose inputs are non-linear transformations of $X$!
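To make the activation-function view concrete, here is a minimal sketch of the forward pass of a GAM with a log link, $g = \log$, a common choice for Poisson claim counts in insurance. The rating factors (driver age, vehicle power), the shape functions `f1` and `f2`, and the intercept are all hypothetical values chosen for illustration; in a fitted GAM the $f_i$ would be estimated from data.

```python
import numpy as np

# Hypothetical shape functions on the link (log) scale, for illustration only.
def f1(age):
    # U-shaped effect of driver age, lowest at age 45
    return 0.0004 * (age - 45.0) ** 2

def f2(power):
    # increasing but flattening effect of vehicle power
    return 0.3 * np.log1p(power / 50.0)

def predict_mean(age, power, intercept=-2.0):
    """E[Y] = g^{-1}(intercept + f1(age) + f2(power)) with log link g = log."""
    eta = intercept + f1(age) + f2(power)  # additive predictor on the link scale
    return np.exp(eta)                     # inverse link acts like an activation function

ages = np.array([20.0, 45.0, 70.0])
powers = np.array([100.0, 100.0, 100.0])
print(predict_mean(ages, powers))
```

Swapping `np.exp` for the logistic function $1/(1+e^{-\eta})$ would give the binary (logit-link) version of the same additive structure.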

Each term $f_i(X_i)$ is a smooth, nonparametric function, which means its shape is learned from the data rather than fixed in advance. Since we do not impose a linear or parabolic relationship up front, the model is able to capture non-linear relationships between $g(E[Y])$ and each $X_i$.

Why and When to use GAM

"All models are wrong, but some are useful."

— George E. P. Box

Here are some reasons to use a GAM:

- Accuracy

- Interpretability

- Automation

Because it can model non-linear effects of $X$, a GAM can often achieve higher accuracy than traditional regression models such as linear regression and GLMs.
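As a rough illustration of the accuracy point (not a benchmark), the sketch below fits a straight line and a simple linear-spline basis to synthetic data generated from $\sin(x)$ plus noise. The data, the knot positions, and the helper names `hinge_basis` and `fit_mse` are assumptions for illustration; real GAM software uses penalized spline bases and should be judged on held-out data rather than training error.

```python
import numpy as np

# Synthetic data with a clearly non-linear signal (an assumption for illustration).
rng = np.random.default_rng(0)
x = np.sort(rng.uniform(0.0, 10.0, 200))
y = np.sin(x) + rng.normal(0.0, 0.3, x.size)

def hinge_basis(x, knots):
    """Linear-spline basis: intercept, x, and max(0, x - k) for each knot k."""
    cols = [np.ones_like(x), x] + [np.maximum(0.0, x - k) for k in knots]
    return np.column_stack(cols)

def fit_mse(X, y):
    """Least-squares fit (maximum likelihood under normal errors) and its training MSE."""
    w, *_ = np.linalg.lstsq(X, y, rcond=None)
    return np.mean((y - X @ w) ** 2)

X_linear = np.column_stack([np.ones_like(x), x])
X_spline = hinge_basis(x, knots=np.linspace(1.0, 9.0, 9))

mse_linear = fit_mse(X_linear, y)
mse_spline = fit_mse(X_spline, y)
print(f"linear MSE: {mse_linear:.3f}, spline MSE: {mse_spline:.3f}")
```

Because the spline basis contains the straight line as a special case, its training error can never be larger; the interesting part is how much smaller it gets when the true effect is non-linear.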

From the discussion above, a GAM can be viewed as a simplified neural network (NN), so it may not reach the same accuracy as an NN. However, a GAM offers interpretability that an NN often lacks. Since the model is additive, we can make statements about the effect of each independent variable separately. Using SHAP or other visualization techniques, such as plotting each $f_i$ against $X_i$, it is easy to explain the results to non-specialists.
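Because the model is additive, a single prediction can be split exactly into one contribution per variable. The sketch below uses made-up shape functions and a made-up five-policy portfolio (all names and numbers are hypothetical) and centres each term at its portfolio average, so each contribution reads as how far that variable moves the prediction away from average on the link scale. For a purely additive model, these centred terms coincide with interventional SHAP values on the link scale (with the portfolio as background data), which is one reason SHAP explanations of GAMs are easy to read.

```python
import numpy as np

# Hypothetical fitted shape functions of a two-term additive model (link scale).
shape_functions = {
    "age": lambda a: 0.0004 * (a - 45.0) ** 2,
    "power": lambda p: 0.3 * np.log1p(p / 50.0),
}
intercept = -2.0

# Hypothetical portfolio used as the reference population.
data = {
    "age": np.array([22.0, 35.0, 48.0, 60.0, 74.0]),
    "power": np.array([60.0, 90.0, 120.0, 150.0, 200.0]),
}

def explain(row):
    """Split the linear predictor of one row into a baseline plus one contribution per term.

    Each term is centred at its mean over the reference data, so a contribution
    of 0 means "average effect" for that variable.
    """
    baseline = intercept + sum(f(data[name]).mean() for name, f in shape_functions.items())
    contributions = {
        name: float(f(row[name]) - f(data[name]).mean())
        for name, f in shape_functions.items()
    }
    return baseline, contributions

row = {"age": 22.0, "power": 200.0}
baseline, contributions = explain(row)
eta = intercept + sum(f(row[name]) for name, f in shape_functions.items())
print(baseline, contributions, eta)
```

The baseline plus the contributions always adds back up to the full linear predictor `eta`, so nothing is hidden in interaction terms.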

The Multithreaded article also mentions another good reason, automation: the model finds patterns by itself, so we do not need to know up front what type of function each variable requires. Here is a question: what if the model finds the relationship $g(E[Y])=X_1^{0.998}+X_2^{2.02}$? Or $g(E[Y])=X_1^{0.456}+X_2^{1.544}$? Of course, you could round the first formula to $g(E[Y])=X_1+X_2^2$ for convenience. However, you can also trust both results as they are, because "all models are wrong". Nothing in nature requires $Y$ to have a neat formula in terms of $X$. These formulas simply happen to fit our data with the least loss (at least locally). In other words, they work!

The article also mentions regularization as a reason: we can control the smoothness of each function to prevent overfitting. However, many models can now add a regularization term to the loss function to address this problem, so I prefer to emphasize accuracy, interpretability, and automation, which set GAMs apart from other models.

So when we want more accuracy than a traditional linear model while keeping interpretability, and we also want the model to find patterns in the data automatically, a GAM is a good choice. GAMs are also widely used in insurance pricing today.

How to estimate the $f_i$?

In principle, $f_i$ could be any function. However, searching over all possible functions would take far too long unless we restrict the search space. In practice, each $f_i$ is built from a fixed set of basis functions, typically spline basis functions (B-splines) collected in $F_i$, and the likelihood function is used to estimate the weights $w_i$, so that $f_i=w_iF_i$, i.e. $f_i(x)=\sum_k w_{ik}F_{ik}(x)$.
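Here is a minimal sketch of that idea for a single smooth term, assuming normally distributed errors and the identity link, in which case maximizing the likelihood reduces to ordinary least squares. The helper `bspline_basis` is a hypothetical name that evaluates cubic B-spline basis functions with the Cox-de Boor recursion (in practice, SciPy's `scipy.interpolate.BSpline` or the GAM packages themselves build these bases for you); the knots and the synthetic data are assumptions. This fit has no penalty yet, so the number of knots alone controls how flexible $f$ is.

```python
import numpy as np

def bspline_basis(x, interior_knots, lower, upper, degree=3):
    """Evaluate all B-spline basis functions at x with the Cox-de Boor recursion.

    Returns an array of shape (len(x), K), K = len(interior_knots) + degree + 1,
    whose columns play the role of F_i1, ..., F_iK in the text.
    """
    x = np.asarray(x, dtype=float)
    # clamped knot vector: boundary knots repeated degree + 1 times
    t = np.concatenate([[lower] * (degree + 1), interior_knots, [upper] * (degree + 1)])
    # degree 0: indicator of each half-open knot interval [t_k, t_{k+1})
    B = np.zeros((x.size, len(t) - 1))
    for k in range(len(t) - 1):
        B[:, k] = (t[k] <= x) & (x < t[k + 1])
    # let x == upper fall in the last non-empty interval
    last = np.nonzero(t[:-1] < t[1:])[0][-1]
    B[x == upper, last] = 1.0
    # raise the degree one step at a time
    for d in range(1, degree + 1):
        B_next = np.zeros((x.size, len(t) - 1 - d))
        for k in range(len(t) - 1 - d):
            left = t[k + d] - t[k]
            right = t[k + d + 1] - t[k + 1]
            if left > 0:
                B_next[:, k] += (x - t[k]) / left * B[:, k]
            if right > 0:
                B_next[:, k] += (t[k + d + 1] - x) / right * B[:, k + 1]
        B = B_next
    return B

# Synthetic data with a smooth, non-linear effect (an assumption for illustration).
rng = np.random.default_rng(1)
x = np.sort(rng.uniform(0.0, 1.0, 100))
y = np.exp(-3.0 * x) + rng.normal(0.0, 0.05, x.size)

F = bspline_basis(x, interior_knots=np.linspace(0.1, 0.9, 9), lower=0.0, upper=1.0)
w, *_ = np.linalg.lstsq(F, y, rcond=None)   # estimated weights w_ik
f_hat = F @ w                               # f(x) = sum_k w_k F_k(x) at each data point
print(F.shape, np.round(w, 3))
```

Each row of `F` sums to 1 (B-splines form a partition of unity), which is one reason they are numerically well behaved compared with raw polynomial terms.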

Here I use Multithreaded's example, with some modifications to connect it to routines in actuarial science and linear algebra. The penalized likelihood function is given by

$$ 2 l(\alpha, f_1(x_1), \ldots, f_m(x_m)) - \text{penalty} $$

where $l(\alpha, f_1, \ldots, f_m)$ is the standard log likelihood function.

For a binary GAM with a logistic (logit) link function, the log likelihood is defined as

$$ l(\alpha, f_1(x_1), \ldots, f_m(x_m)) = \sum_{i=1}^n (y_i \log \hat{y}_i + (1-y_i) \log (1-\hat{y}_i)) $$

where $\hat{y}_i$ is given by

$$\hat{y}_i = P(Y=1 | x_1, \ldots, x_m) =\frac {1} { 1 + e^{-(\hat{\alpha} + \sum_{j=1}^m f_j(x_{ij}) )}} $$
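The log likelihood translates directly into code. This sketch assumes the values $f_j(x_{ij})$ have already been computed and stacked into an $n \times m$ array; the function names and the toy inputs are hypothetical. It evaluates $\log \hat{y}_i$ and $\log(1-\hat{y}_i)$ with `np.logaddexp`, which is algebraically identical to the formula but avoids taking the log of values that round to 0 or 1.

```python
import numpy as np

def logistic_gam_loglik(alpha, f_values, y):
    """l(alpha, f_1, ..., f_m) for a binary GAM with a logit link.

    f_values: array of shape (n, m) with f_values[i, j] = f_j(x_ij).
    y: array of n responses coded 0/1.
    """
    eta = alpha + f_values.sum(axis=1)   # alpha + sum_j f_j(x_ij)
    log_p = -np.logaddexp(0.0, -eta)     # log(y_hat_i), y_hat_i = 1 / (1 + exp(-eta_i))
    log_1mp = -np.logaddexp(0.0, eta)    # log(1 - y_hat_i)
    return np.sum(y * log_p + (1 - y) * log_1mp)

def penalized_objective(alpha, f_values, y, penalty):
    """2 * l(...) - penalty, the quantity a penalized fit maximizes."""
    return 2.0 * logistic_gam_loglik(alpha, f_values, y) - penalty

# Toy inputs: 4 observations, 2 smooth terms (values are made up).
y = np.array([1, 0, 1, 1])
f_values = np.array([[0.5, -0.1], [-1.2, 0.3], [0.8, 0.0], [0.2, 0.4]])
print(logistic_gam_loglik(-0.1, f_values, y))
print(penalized_objective(-0.1, f_values, y, penalty=0.25))
```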

The penalty can, for example, be based on the second derivatives

$$ \text{penalty} = \sum_{j=1}^m \lambda_j \int (f''_j(x_j))^2 dx $$

The parameters $\lambda_j$ are smoothing parameters: the larger $\lambda_j$, the smoother the fitted curve $f_j$ will be.
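To see the effect of $\lambda$, the sketch below swaps the spline basis for the simplest possible one: a separate value $f(x)$ at each point of an equally spaced grid. The penalty then becomes the sum of squared second differences, a discrete version of $\int (f'')^2\,dx$ up to a constant factor that can be absorbed into $\lambda$. This is the Whittaker-Henderson smoother familiar from actuarial graduation, used here as a stand-in for intuition rather than as what any particular GAM library does; the data and the $\lambda$ values are made up.

```python
import numpy as np

def whittaker_smooth(y, lam):
    """Minimise sum((y - f)**2) + lam * sum((second differences of f)**2) over f.

    The second-difference term is a discrete stand-in for the integral of f''(x)^2
    on an equally spaced grid; the minimiser solves (I + lam * D^T D) f = y.
    """
    n = y.size
    D = np.diff(np.eye(n), n=2, axis=0)   # (n - 2) x n matrix with rows [1, -2, 1]
    return np.linalg.solve(np.eye(n) + lam * D.T @ D, y)

# Synthetic noisy data on an equally spaced grid (an assumption for illustration).
rng = np.random.default_rng(2)
x = np.linspace(0.0, 1.0, 101)
y = np.sin(2 * np.pi * x) + rng.normal(0.0, 0.3, x.size)

lams = [0.1, 10.0, 1000.0]
fits = {lam: whittaker_smooth(y, lam) for lam in lams}
for lam in lams:
    rough = np.sum(np.diff(fits[lam], n=2) ** 2)
    print(f"lambda = {lam:g}: roughness = {rough:.5f}")
```

As $\lambda \to 0$ the fit reproduces the noisy data exactly; as $\lambda \to \infty$ the second differences are forced to zero and the fit approaches the least-squares straight line.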

Using a penalty function of the form $\text{penalty} = \sum_{j=1}^m \lambda_j \int (f''_j(x_j))^2 \, dx$ offers several key advantages:

- Non-Negative Penalty: Squaring the second derivative ensures that the penalty is always non-negative, providing a stable constraint that consistently discourages large curvature.

- Emphasis on Large Curvatures: By squaring $f''_j(x_j)$, larger curvature values are penalized more heavily, effectively preventing the function from exhibiting abrupt changes or excessive oscillations.

- Global Smoothness Measurement: The integral aggregates the squared second derivatives over the entire domain, offering a comprehensive measure of the function's overall smoothness rather than focusing on local variations.

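- Mathematical Convenience: When $f_j$ is written as a weighted sum of basis functions, the penalty becomes a quadratic form in the weights, $w_j^\top S_j w_j$ for a fixed matrix $S_j$. It is therefore differentiable, and the penalized fit can be computed efficiently, for example by penalized (iteratively reweighted) least squares or gradient-based methods.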

- Energy Functional Analogy: The integral of the squared second derivative approximates the bending energy of a thin elastic strip bent into the shape of $f_j$ (the draftsman's spline that gave splines their name). Minimizing this "energy" favors gently bending, smooth solutions.

- Ensuring Continuity and Differentiability: The penalty is only finite when $f''_j$ exists and is square-integrable, so it rules out kinks and jumps. In the classic smoothing-spline setting, the minimizer is a natural cubic spline, which has continuous first and second derivatives, a property many applications require.

In summary, incorporating the squared second derivative and integrating it across the domain effectively enforces smoothness, prevents overfitting by discouraging excessive curvature, and leverages mathematical properties that make the optimization process more manageable. This approach balances fitting the data with maintaining a well-behaved, smooth function.
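The first few properties are easy to check numerically. The sketch below approximates $\int (f''(x))^2\,dx$ on $[0, 1]$ with finite differences for a smooth function and for the same function with a small, fast oscillation added; the helper name `roughness`, both test functions, and the value of `lam` are assumptions for illustration. Real GAM software does not integrate numerically like this: with a spline basis, the integral becomes an exact quadratic form in the coefficients.

```python
import numpy as np

def roughness(f, lower, upper, n=10_001):
    """Approximate the integral of f''(x)**2 over [lower, upper] by finite differences."""
    x = np.linspace(lower, upper, n)
    h = x[1] - x[0]
    fx = f(x)
    second = (fx[2:] - 2.0 * fx[1:-1] + fx[:-2]) / h**2   # central second differences
    return np.sum(second**2) * h                            # Riemann sum of (f'')^2

def smooth(x):
    return x**2                                   # f'' = 2, so the exact integral is 4

def wiggly(x):
    return x**2 + 0.05 * np.sin(20 * np.pi * x)   # same curve plus a small, fast wiggle

lam = 0.5  # hypothetical smoothing parameter
print("smooth:", lam * roughness(smooth, 0.0, 1.0))
print("wiggly:", lam * roughness(wiggly, 0.0, 1.0))
```

Although the wiggle never moves the curve by more than 0.05, its penalty is several thousand times larger: squaring amplifies the large second derivative of the oscillation (here $|f''|$ reaches roughly 200), and the integral accumulates it over all ten periods.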

How to use GAM in programming languages?

In SAS, you can use PROC GAM or PROC GAMPL.

In R, you can use the mgcv package.

In Python, you can use the pyGAM package, e.g. `from pygam import LinearGAM`.

References

https://multithreaded.stitchfix.com/blog/2015/07/30/gam/

https://zhuanlan.zhihu.com/p/652483232